Yesterday I wrote an article for Crikey plus a post here based on Google Trends data which, it now appears, is dodgy.

Google Trends shows a steady decline in traffic to various websites since about September 2008, based on the metric “unique daily browsers”. But I was howled down: nobody else’s metrics showed such a decline.

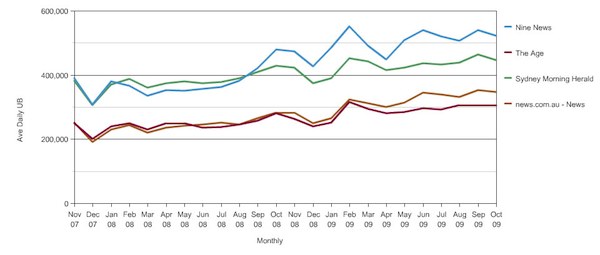

Indeed many, such as this chart of Nielsen NetRatings’ unique dailies, provided by Andrew Hunter (@Huntzie), Head of News, Sport and Finance at ninemsn, showed the exact opposite.

For example, news.com.au grew from 250,829 average daily unique browsers (UBs) in July 2008 to 346,367 in October 2009, a 38% increase. Not the roughly 50% drop shown by Google Trends.
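To double-check that percentage, here’s a trivial Python sketch. The helper name `percent_change` is my own; the inputs are the Nielsen NetRatings figures quoted above.

```python
def percent_change(before: float, after: float) -> float:
    """Change from `before` to `after`, expressed as a percentage of `before`.

    `percent_change` is a hypothetical helper written for this check.
    """
    return (after - before) / before * 100


# news.com.au average daily unique browsers, as quoted above (Nielsen NetRatings)
july_2008 = 250_829
october_2009 = 346_367

growth = percent_change(july_2008, october_2009)
print(f"{growth:.1f}%")  # about a 38% rise, not a 50% fall
```

For comparison, a 50% drop from the July 2008 figure would have put the site at roughly 125,400 daily browsers.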

Google says:

Trends for Websites combines information from a variety of sources, such as aggregated Google search data, aggregated opt-in anonymous Google Analytics data, opt-in consumer panel data, and other third-party market research. The data is aggregated over millions of users, powered by computer algorithms…

In other words, it’s some Google Secret Sauce. But has the sauce gone off?

The Google Trends forum is rather quiet. Only three questions or comments were posted in the whole of September, none of which received a reply, and nothing has been posted since. As far as I can see, nobody from Google has responded to anything in months; I gave up looking any further back. Others have noted that Google Trends data differs wildly from Google’s own Analytics product, usually complaining that Trends shows significantly less traffic.

Google Trends is a Google Labs product, i.e. an experiment. I’m starting to think that it’s been abandoned and we’re just seeing a slow degradation due to lack of maintenance.

Meanwhile, I have changed my Twitter avatar to a goose for the rest of today.

One thing is certainly clear: there’s a lot of bullshit out there underpinning a billion-dollar advertising industry.

“All communication is propaganda.” Head of news, sport and media at nine.msn, heh. Hmm, what’s that quote about statistics again?

Of all the publicly available online stats to use, Google Trends for Websites (as opposed to Google Trends for search) is the worst: it has little to no relationship with actual stats, and I’m not even sure why they offer it. They say we do 10k a day… Mmm, where have I heard someone working for Crikey claim before that we don’t make any money and don’t count? 😉

At worst, use Alexa: I know its figures aren’t particularly accurate, but it’s a good way to get long-term trend data (which shows these sites being mostly flat): http://skitch.com/duncanriley/njfbc/news.com.au-site-info-from-alexa

@glengyron: As long as the bullshit is expressed as a number, and preferably a number that’s bigger for you than for your competitors, it doesn’t really matter what the number is nor how it was generated.

See also, in mUmBRELLA today: “In The Punch vs National Times debate, the missing metric is personality”.

@Paul: Hah! Yes, and whose line was topmost on the chart that ninemsn provided? ninemsn’s, of course.

Still, my critics have been relatively gracious. I won’t pick on them too much.

@Duncan Riley: This was the first time I’d explored Google Trends for Websites, and since it uses a different methodology, I genuinely believed the results I was seeing were caused by that difference.

Well, in a sense I was! Just not the way I’d imagined… 🙂

Of course, as you know, Sir, every traffic analysis tool is an estimate based on various assumptions. Sometimes people choose a particular tool ’cos the reports look prettier, or it’s marketed better, or the results are more flattering.

This website, for example, has had either 16,306 unique visitors this month, or 61,490 or 7,036 — depending on whether I prefer AWStats or analog or Google Analytics. What’s a boy to believe?

I still think your conclusion may have validity, despite the absence of hard data.

Just because newspaper web stat graphs are going up (or down, for that matter) doesn’t mean they’re changing at a rate commensurate with, well, anything: be it changes in website viewing behaviour or a migration of eyeballs from paper to web.

Jokes about employees checking their Facebook every 6.5 minutes might sound funny, but they have a basis in reality.

Dammit, we just need better numbers! And as pointed out in other comments, there are people with cookie-, ad- and/or script-blocking in place, shared IPs and proxies, etc.

But then, how is this any different from the scattergun shambles that “ratings” and “readership” numbers have been in old media since year dot? Just a year ago I asked all my friends on Facebook: “Who’s ever taken part in a TV ratings survey?” One couple, nearly 10 years ago. *blank stare*

Murdoch is jumping up and down and making a fool of himself. He does not do that when he does not need to. So maybe there is an issue for their sites. Though it could just be they are not as profitable as they would like.

There are a couple of follow-ups over in Crikey’s Comments, Corrections, Clarifications and C*ck-ups column. Scroll down past all the “Liberals in turmoil” stuff.

@Anthony: Isn’t looking for conclusions without data, or despite the data, a bit like “Iraq has weapons of mass destruction”? 😉

That said, you do have a point. Even if all of the Google Trends data has a systematic error, so that those news sites’ traffic is actually going up, the same error would mean Facebook’s and Twitter’s traffic is rising even faster than the graphs show. In that case people’s time would still be shifting to social networking sites and away from news sites, albeit within a growing total audience.

I’d still like actual data to support that theory though.

I’m not so fussed by the old “Who do you know who’s ever been in the TV ratings?” chestnut. You only need a couple of thousand people to get a reasonably representative sample. As this margin of error calculator shows, in Sydney’s population of 4 million, for instance, a sample of 3000 people gives you a margin of error (MOE) of 1.79 percentage points at the standard 95% confidence level.
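For anyone wanting to check that 1.79 figure without the online calculator, here’s a minimal Python sketch of the usual textbook formula. It assumes simple random sampling, the worst-case proportion of 50% and z = 1.96 for 95% confidence, with a finite population correction; I’m assuming that’s what the calculator does too. The function name is my own.

```python
import math


def margin_of_error(n: int, population: int, z: float = 1.96, p: float = 0.5) -> float:
    """Margin of error for a sample proportion, in percentage points.

    Hypothetical helper. Assumes a simple random sample of size n drawn from
    a population of the given size, the worst-case proportion p = 0.5, and
    z = 1.96 for 95% confidence.
    """
    standard_error = math.sqrt(p * (1 - p) / n)
    # Finite population correction: shrinks the MOE when the sample is a
    # large fraction of the population (negligible for 3000 out of 4 million).
    fpc = math.sqrt((population - n) / (population - 1))
    return z * standard_error * fpc * 100


print(f"{margin_of_error(3000, 4_000_000):.2f} percentage points")
```

Leaving out the finite population correction still gives about 1.79 points here, which is why the population size barely matters once it’s large: a sample of 3000 is roughly as informative for Sydney as it would be for a much bigger city.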

That said, I’ve seen a radio presenter get upset by an audience drop of one percentage point between ratings surveys. No matter how much I or the head of audience research tried to convince him, he simply wouldn’t accept that it was probably statistical noise.

I’ve also seen this fall apart when people try to analyse demographic slices within this sample, forgetting that the MOE then relates not to the total sample and population sizes, but to the size of that individual slice of 26- to 30-year-old left-handed lesbians.
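To show how quickly that bites, here’s a rough Python sketch using the same worst-case formula (p = 0.5, z = 1.96), this time without the finite population correction since the size of each slice’s population is unknown. The slice sizes are purely hypothetical, not from any real ratings survey.

```python
import math


def margin_of_error(n: int, z: float = 1.96, p: float = 0.5) -> float:
    """Worst-case margin of error in percentage points for a simple random
    sample of size n, ignoring the finite population correction.
    Hypothetical helper for illustration."""
    return z * math.sqrt(p * (1 - p) / n) * 100


# Hypothetical sizes: the whole 3000-person sample, then ever-narrower
# demographic slices within it.
for n in (3000, 600, 150, 30):
    print(f"n = {n:>4}: ±{margin_of_error(n):.1f} points")
```

Because the MOE scales with 1/√n, a slice one hundredth the size of the full sample has ten times the margin of error: a 150-person slice is already around ±8 points. For very small slices the normal approximation behind this formula gets shaky too, so even those figures flatter the data.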

@yewenyi: I think there are currently more theories about Rupert’s motives than there are atoms in the Sun.