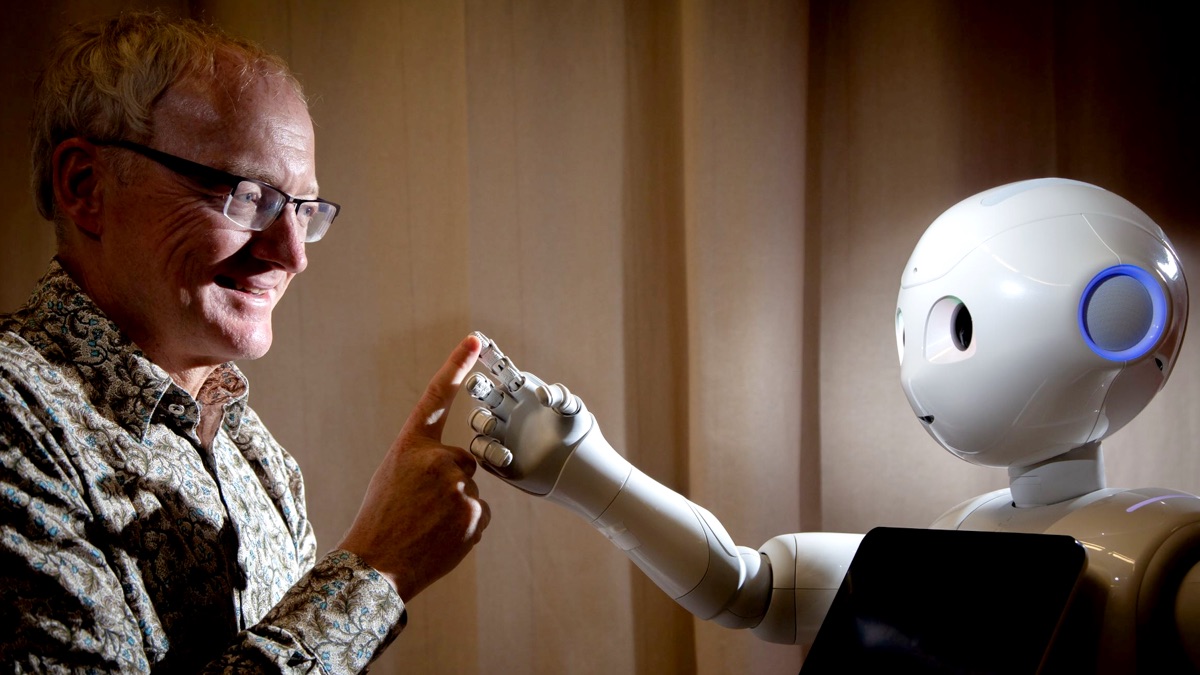

The first special-guest episode in the spring series is a fascinating chat about artificial intelligence, killer robots, and more with UNSW Scientia Professor Toby Walsh, chief scientist of the brand new UNSW AI Institute.

In this episode we talk about neural networks, the dangers of algorithmic policing, Google Duplex, cryptocurrency, HAL 9000 (obviously) and other disembodied computers, tribbles, whether red-eyed robots will take over the world, and even the Australian government’s illegal “robodebt” scheme.

This podcast is available on Amazon Music, Apple Podcasts, Castbox, Deezer, Google Podcasts, iHeartRadio, JioSaavn, Pocket Casts, Podcast Addict, Podchaser, SoundCloud, Spotify, and Spreaker.

You can also listen to the podcast below, or subscribe to the generic podcast feed.

Podcast: Play in new window | Download (Duration: 1:07:17 — 61.6MB)

Episode Links

-

Toby Walsh FAA FACM FAAAS FAAAI FEurAI is a Laureate Fellow and professor of artificial intelligence in the UNSW School of Computer Science and Engineering at the University of New South Wales and Data61 (formerly NICTA). He has served as Scientific Director of NICTA, Australia's centre of excellence for ICT research. He is noted for his work in artificial intelligence, especially in the areas of social choice, constraint programming and propositional satisfiability. He has served on the Executive Council of the Association for the Advancement of Artificial Intelligence.

-

"Machines Behaving Badly" out now! Laureate Fellow & Scientia Professor of AI @ UNSW Sydney.

-

The flagship UNSW Research Institute in artificial intelligence, data science and machine learning.

-

Artificial intelligence (AI) is intelligence demonstrated by machines, as opposed to the natural intelligence displayed by animals and humans. AI research has been defined as the field of study of intelligent agents, which refers to any system that perceives its environment and takes actions that maximize its chance of achieving its goals.

-

Can we build moral machines? Toby Walsh, AI expert, examines the ethical issues we face in a future dominated by artificial intelligence.

-

Television commercial for a UNIVAC computer. Aired 5 February 1956 CBS.

-

UNIVAC (Universal Automatic Computer) was a line of electronic digital stored-program computers starting with the products of the Eckert–Mauchly Computer Corporation. Later the name was applied to a division of the Remington Rand company and successor organizations.

-

2062 is the year by which we will have built machines as intelligent as us. This is what the majority of leading artificial intelligence and robotics experts now predict. But what will this future actually look like? When the quest to build intelligent machines has effectively been successful, how will life on this planet unfold?

-

1960–1975: Researchers continued to join the field as the Association for Machine Translation and Computational Linguistics was formed in the U.S. (1962) and the National Academy of Sciences formed the Automatic Language Processing Advisory Committee (ALPAC) to study MT (1964). Real progress was much slower, however, and after the ALPAC report (1966), which found that the ten-year-long research had failed to fulfill expectations, funding was greatly reduced. According to a 1972 report by the Director of Defense Research and Engineering (DDR&E), the feasibility of large-scale MT was reestablished by the success of the Logos MT system in translating military manuals into Vietnamese during that conflict.

-

In machine learning, backpropagation (backprop, BP) is a widely used algorithm for training feedforward neural networks. Generalizations of backpropagation exist for other artificial neural networks (ANNs), and for functions generally.

-

Artificial neural networks (ANNs), usually simply called neural networks (NNs) or neural nets, are computing systems inspired by the biological neural networks that constitute animal brains.

-

[30 July 2021] Some have been used in hospitals, despite not being properly tested. But the pandemic could help make medical AI better.

-

[23 May 2016] There’s software used across the country to predict future criminals. And it’s biased against blacks.

-

[26 October 2017] Once the unknown and unaccountable process decides you're a potential future criminal, simply wearing the 'wrong' clothes and sitting in the 'wrong' train carriage can attract police attention.

-

[26 January 2021] Opposition is building against the ethically dubious practice of predictive or algorithmic policing, but in Australia and elsewhere it's something that the cops just don't want to talk about.

-

In the regulation of algorithms, particularly artificial intelligence and its subfield of machine learning, a right to explanation (or right to an explanation) is a right to be given an explanation for an output of the algorithm. Such rights primarily refer to individual rights to be given an explanation for decisions that significantly affect an individual, particularly legally or financially.

-

The Robodebt scheme, formally Online Compliance Intervention (OCI), was an unlawful method of automated debt assessment and recovery employed by Services Australia as part of its Centrelink payment compliance program. Put in place in July 2016 and announced to the public in December of the same year, the scheme aimed to replace the formerly manual system of calculating overpayments and issuing debt notices to welfare recipients with an automated data-matching system that compared Centrelink records with averaged income data from the Australian Taxation Office.

-

[11 June 2021] A court has approved a $1.8 billion class action settlement in the Robodebt scandal. The judge described it as a shameful chapter, with innocent Australians on welfare treated like they were criminals.

-

[26 August 2022] Hundreds of thousands of people are hoping that some light will be shed on how the scheme — labelled a "massive failure" by Federal Court Justice Bernard Murphy — came to be.

-

Cyc (pronounced /ˈsaɪk/ SYKE) is a long-term artificial intelligence project that aims to assemble a comprehensive ontology and knowledge base that spans the basic concepts and rules about how the world works. Hoping to capture common sense knowledge, Cyc focuses on implicit knowledge that other AI platforms may take for granted. This is contrasted with facts one might find somewhere on the internet or retrieve via a search engine or Wikipedia. Cyc enables semantic reasoners to perform human-like reasoning and be less "brittle" when confronted with novel situations.

-

In artificial intelligence, an expert system is a computer system emulating the decision-making ability of a human expert. Expert systems are designed to solve complex problems by reasoning through bodies of knowledge, represented mainly as if–then rules rather than through conventional procedural code. The first expert systems were created in the 1970s and then proliferated in the 1980s. Expert systems were among the first truly successful forms of artificial intelligence (AI) software. An expert system is divided into two subsystems: the inference engine and the knowledge base. The knowledge base represents facts and rules. The inference engine applies the rules to the known facts to deduce new facts. Inference engines can also include explanation and debugging abilities.

-

Reinforcement learning (RL) is an area of machine learning concerned with how intelligent agents ought to take actions in an environment in order to maximize the notion of cumulative reward. Reinforcement learning is one of three basic machine learning paradigms, alongside supervised learning and unsupervised learning.

-

AlphaGo is a computer program that plays the board game Go. It was developed by DeepMind Technologies, a subsidiary of Google (now Alphabet Inc.). Subsequent versions of AlphaGo became increasingly powerful, including a version that competed under the name Master. After retiring from competitive play, AlphaGo Master was succeeded by an even more powerful version known as AlphaGo Zero, which was completely self-taught without learning from human games. AlphaGo Zero was then generalized into a program known as AlphaZero, which played additional games, including chess and shogi. AlphaZero has in turn been succeeded by a program known as MuZero, which learns without being taught the rules.

-

[16 May 2022] The other day I discovered that all of the James Bond films are on Prime Video and available free as part of an Amazon Prime subscription. There’s a good few I haven’t seen, so every Tuesday night starting tomorrow I’ll watch one. You can join me.

-

[8 July 2022] Google Duplex is an unusual AI technology active in (most of) the US, as well as a select group of countries around the world. At first it was narrowly focused on restaurant reservations, but its use has since expanded to other tasks.

-

[24 November 2018] Making a restaurant reservation with the Google Assistant.

-

[22 November 2018] VentureBeat tested Duplex in Google Assistant to make a restaurant reservation at Cafe Prague in San Francisco. Update on November 26: Google says the majority of Duplex calls via Google Assistant are automated, meaning a bot is speaking. The call we recorded, however, was part of the manual baseline, meaning a human was speaking the whole time.

-

[9 May 2022] The system can learn to perform tasks across new domains and industries, using real-time supervised training. Experienced operators perform this coaching like an instructor watching a student’s work and sharing advice to ensure they accomplish tasks to the optimum standards. These operators can alter the system's behaviors in real time by observing it make phone calls in a new domain. Such a process continues until the system reaches the desired level of quality, at which point monitoring stops, and the system is free to handle the new task.

-

HAL 9000 is a fictional artificial intelligence character and the main antagonist in Arthur C. Clarke's Space Odyssey series. First appearing in the 1968 film 2001: A Space Odyssey, HAL (Heuristically programmed ALgorithmic computer) is a sentient artificial general intelligence computer that controls the systems of the Discovery One spacecraft and interacts with the ship's astronaut crew. While part of HAL's hardware is shown toward the end of the film, he is mostly depicted as a camera lens containing a red or yellow dot, with such units located throughout the ship.

-

David Gerrold (born Jerrold David Friedman; January 24, 1944) is an American science fiction screenwriter and novelist. He wrote the script for the original Star Trek episode "The Trouble with Tribbles", created the Sleestak race on the TV series Land of the Lost, and wrote the novelette "The Martian Child", which won both Hugo and Nebula Awards, and was adapted into a 2007 film starring John Cusack.

-

"The Trouble with Tribbles" is the fifteenth episode of the second season of the American science fiction television series Star Trek. Written by David Gerrold and directed by Joseph Pevney, it was first broadcast on December 29, 1967. In this comic episode, the starship Enterprise visits a space station that soon becomes overwhelmed by rapidly-reproducing small furry creatures called "tribbles."

-

When HARLIE Was One is a 1972 science fiction novel by American writer David Gerrold. It was nominated for the Nebula Award for Best Novel in 1972 and the Hugo Award for Best Novel in 1973... Central to the story is an artificial intelligence named H.A.R.L.I.E., also referred to by the proper name "HARLIE"—an acronym for Human Analog Replication, Lethetic Intelligence Engine (originally Human Analog Robot Life Input Equivalents).

-

[24 April 2012] At the 2012 Aussie Demo Jam, held as part of the SAP Inside Track Sydney and Mastering SAP Technologies event on March 25th 2012, I showcased a demo that used a Parrot AR.Drone helicopter, ABAP and the Siri voice activated assistant that comes on the latest Apple iPhone 4S.

-

A cautionary tale in artificial intelligence tells about researchers training a neural network (NN) to detect tanks in photographs, succeeding, only to realize the photographs had been collected under specific conditions for tanks/non-tanks and the NN had learned something useless like time of day. This story is often told to warn about the limits of algorithms and importance of data collection to avoid “dataset bias”/“data leakage” where the collected data can be solved using algorithms that do not generalize to the true data distribution, but the tank story is usually never sourced.

-

The Sonny Bono Copyright Term Extension Act – also known as the Copyright Term Extension Act, Sonny Bono Act, or (derisively) the Mickey Mouse Protection Act – extended copyright terms in the United States in 1998. It is one of several acts extending the terms of copyrights.

-

[20 August 2022] NSW minister for customer service and digital government, Victor Dominello, has been praised for creating a world-first customer service experience with his evolution of Service NSW.

-

[16 July 2022] The validity of COVID-19 fines issued across NSW will be called into question in a court case that has the potential to invalidate millions of dollars worth of penalty notices issued during the state’s lockdowns.

-

[21 June 2022] I woke up on Friday morning a pawn in a Kafka-esque story. Except I hadn’t been transformed into a chess piece but was a diplomatic pawn, a small player in a much larger international story. I read the news that I and 119 other “prominent” Australians were banned from travelling to Russia “indefinitely”.

-

The German S-mine (Schrapnellmine, Springmine or Splittermine in German), also known as the "Bouncing Betty" on the Western Front and "frog-mine" on the Eastern Front, is the best-known version of a class of mines known as bounding mines. When triggered, these mines are launched into the air and then detonated at about 1 meter (3 ft) from the ground. The explosion projects a lethal spray of shrapnel in all directions.

-

The Convention on the Prohibition of the Use, Stockpiling, Production and Transfer of Anti-Personnel Mines and on their Destruction of 1997, known informally as the Ottawa Treaty, the Anti-Personnel Mine Ban Convention, or often simply the Mine Ban Treaty, aims at eliminating anti-personnel landmines (AP-mines) around the world. To date, there are 164 state parties to the treaty. One state (the Marshall Islands) has signed but not ratified the treaty, while 32 UN states, including China, Russia, and the United States, have not signed it, making a total of 33 United Nations states not party.

-

The Phalanx CIWS (often spoken as "sea-wiz") is a gun-based close-in weapon system to defend military watercraft automatically against incoming threats such as aircraft, missiles, and small boats. It was designed and manufactured by the General Dynamics Corporation, Pomona Division, later a part of Raytheon. Consisting of a radar-guided 20 mm (0.8 in) Vulcan cannon mounted on a swiveling base, the Phalanx has been used by the United States Navy and the naval forces of 15 other countries.

-

The SGR-A1 is a type of autonomous sentry gun that was jointly developed by Samsung Techwin (now Hanwha Aerospace) and Korea University to assist South Korean troops in the Korean Demilitarized Zone. It is widely considered as the first unit of its kind to have an integrated system that includes surveillance, tracking, firing, and voice recognition.

-

On 4 August 2018, two drones detonated explosives near Avenida Bolívar, Caracas, where Nicolás Maduro, the President of Venezuela, was addressing the Bolivarian National Guard in front of the Centro Simón Bolívar Towers and Palacio de Justicia de Caracas. The Venezuelan government claims the event was a targeted attempt to assassinate Maduro, though the cause and intention of the explosions is debated. Others have suggested the incident was a false flag operation designed by the government to justify repression of opposition in Venezuela.

-

[November 2012] The U.S. government is surreptitiously collecting the DNA of world leaders, and is reportedly protecting that of Barack Obama. Decoded, these genetic blueprints could provide compromising information. In the not-too-distant future, they may provide something more as well—the basis for the creation of personalized bioweapons that could take down a president and leave no trace.

-

[9 October 2014] The work of this year’s winners of the Nobel Prize in Physics cannot be overstated. As the Nobel Foundation said when they awarded the prize to Isamu Akasaki, Hiroshi Amano, and Shuji Nakamura (the three inventors of the blue light-emitting diode), “Incandescent light bulbs lit the 20th century; the 21st century will be lit by LED lamps.” But there’s more to this story. “The background is kind of being swept under the rug,” says Benjamin Gross, a research fellow at the Chemical Heritage Foundation in Philadelphia. “All three of these gentlemen deserve their prize, but there is a prehistory to the LED.” In fact, almost two decades before the Japanese scientists had finished the work that would lead to their Nobel Prize, a young twenty-something materials researcher at RCA named Herbert Paul Maruska had already turned on an LED that glowed blue.

-

Toby Walsh is one of the world’s leading researchers in Artificial Intelligence. He is a Professor of Artificial Intelligence at the University of New South Wales and leads a research group at Data61, Australia’s Centre of Excellence for ICT Research. He has been elected a fellow of the Association for the Advancement of AI for his contributions to AI research, and has won the prestigious Humboldt research award. He has previously held research positions in England, Scotland, France, Germany, Italy, Ireland and Sweden.

-

[14 September 2022] ‘Currently obsessed with the notion that Hans Niemann has been cheating at the Sinquefield Cup chess tournament using wireless anal beads that vibrate him the correct moves,’ tweeted one user.

-

Brazil is a 1985 dystopian black comedy film directed by Terry Gilliam and written by Gilliam, Charles McKeown, and Tom Stoppard. The film stars Jonathan Pryce and features Robert De Niro, Kim Greist, Michael Palin, Katherine Helmond, Bob Hoskins, and Ian Holm.

If the links aren’t showing up, try here.

Thank you, Media Freedom Citizenry

The 9pm Edict is supported by the generosity of its listeners. You can throw a few coins into the tip jar or subscribe for special benefits. Please consider.

This episode it’s thanks once again to all the generous people who contributed to The 9pm Spring Series 2022 crowdfunding campaign.

CONVERSATION TOPIC: Gay Rainbow Anarchist and Richard Stephens.

THREE TRIGGER WORDS: Peter Sandilands, Peter Viertel, Phillip Merrick, Sheepie, and one person who chooses to remain anonymous.

ONE TRIGGER WORD: Andrew Groom, Bic Smith, Bic Smith (again), Bruce Hardie, Elana Mitchell, Errol Cavit, Frank Filippone, Gavin C, Joanna Forbes, John Lindsay, Jonathan Ferguson, Jonathan Ferguson (again), Joop de Wit, Karl Sinclair, Katrina Szetey, Mark Newton, Matthew Moyle-Croft, Michael Cowley, Miriam Mulcahy, Oliver Townshend, Paul Williams, Peter Blakeley, Peter McCrudden, Ric Hayman, Rohan Pearce, Syl Mobile, and four people who choose to remain anonymous.

PERSONALISED AUDIO MESSAGE: Mark Cohen and Rohan (not that one).

FOOT SOLDIERS FOR MEDIA FREEDOM who gave a SLIGHTLY LESS BASIC TIP: Andrew Kennedy, Benjamin Morgan, Bob Ogden, Garth Kidd, Jamie Morrison, Kimberley Heitman, Matt Arkell, Michael Strasser, Paul McGarry, Peter Blakeley, and two people who choose to remain anonymous.

MEDIA FREEDOM CITIZENS who contributed a BASIC TIP: Bren Carruthers, Elissa Harris, Opheli8, Raena Jackson Armitage, and Ron Lowry.

And another five people chose to have no reward, even though some of them were the most generous of all. Thank you all so much.

Series Credits

- The 9pm Edict theme by mansardian, via The Freesound Project.

- Edict fanfare by neonaeon, via The Freesound Project.

- Elephant Stamp theme by Joshua Mehlman.